Here's a short technical article for my fellow Pixinsight learners. It's about some progress I recently made in learning how to reduce background noise in astronomical images. These images, like any images made in low-light situations, have the potential to be plagued by a `grainy' appearance, particularly in the dark background areas. In the parlance of astronomers and amateur astro-imagers, we say that our images commonly exhibit `noise' in the `low-signal-to-noise-ratio (low SNR) areas'. This noise can be reduced by racking up as many hours of exposure time as possible, but there's a limit to what our schedules (and the weather) will allow.

Noise can also be reduced somewhat in post-processing by using software routines, such as those in Pixinsight. This post is a journal entry of sorts, to record how I managed to smooth the noisy background areas of an image of a galaxy cluster. It allowed me to go from this:

To this:

For me, this was a much better result than I'd gotten before, and it happened because I managed to correctly tweak a setting in one of Pixinsight's noise-reduction routines. Details follow, for any other PI users who might find the information useful.

Image Acquisition

As I described in an earlier post, I'd already taken one stab at shooting Markarian's Chain, a prominent grouping of bright galaxies in the Virgo galaxy cluster. After my first attempt, I found a weeknight in late May 2012 when I could re-shoot the Chain, with proper framing this time! The data were acquired with my ED80 imaging rig: An Orion ED 80 f/7.5 refractor and an SBIG ST-8300M CCD camera, on a Losmandy G-11 mount. As I only had one night to acquire the data, I shot through a clear (`Luminance') filter, so as to make a black-and-white image. I managed to get 32 five-minute subexposures, for a total exposure time of 2 2/3 hours.

Ideally, I'd have liked to get at least several hours of exposure time on a target like this, so as to build up a decent SNR in the faint outer parts of the galaxies, and in the dark background areas. I knew that if I was going to make a final image that showed more than just the bright cores of the galaxies, it would take some wizardry with Pixinsight's noise-reduction settings.
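Here are some rough numbers. 32 five-minute subs add up to 160 minutes. Suppose the noise is purely random, an idealization that ignores read noise, gradients, and other systematics. Then SNR grows roughly as the square root of total exposure time, so doubling the SNR would take about four times as long. The Python sketch below checks that arithmetic. It also simulates how averaging N frames of Gaussian noise shrinks the noise by about √N. The helper names are hypothetical.

```python
import numpy as np

def total_exposure_hours(n_subs: int, sub_minutes: float) -> float:
    """Total integration time, in hours, for n_subs subexposures."""
    return n_subs * sub_minutes / 60.0

def relative_snr(hours: float, reference_hours: float) -> float:
    """SNR gain over a reference integration, assuming SNR grows as sqrt(time)."""
    return float(np.sqrt(hours / reference_hours))

def stacked_noise(n_frames: int, sigma: float = 1.0,
                  n_pixels: int = 50_000, seed: int = 0) -> float:
    """Measured noise (std dev) after averaging n_frames of pure Gaussian noise."""
    rng = np.random.default_rng(seed)
    frames = rng.normal(0.0, sigma, size=(n_frames, n_pixels))
    return float(frames.mean(axis=0).std())

hours = total_exposure_hours(32, 5)
print(f"{hours:.2f} h")                       # 2.67 h
print(relative_snr(4 * hours, hours))         # 2.0: four times the exposure doubles the SNR
print(stacked_noise(32), 1 / np.sqrt(32))     # measured vs. predicted noise, both near 0.177
```

Real data fall short of this ideal, so the square-root rule is best read as an upper bound on what extra hours can buy.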

Noise Reduction at the Linear Stage - The General Idea

I followed the same general strategy for this image as I had with the last several images: reduce noise (in the dim, low-SNR areas) and sharpen details (in the bright, high-SNR areas) while the image was still at the linear stage. In other words, the noise reduction and sharpening (`deconvolution') were to be done while the pixels still had their original brightness values. Nearly all of those values are too dark to show up well on a computer screen. However, `stretching' them mathematically would destroy the linear relationship between the pixel values and the true brightness values of the objects in the scene. This basic strategy was laid out by Pixinsight creator Juan Conejero. The ever-obliging Harry Page made a nice video of this type of workflow, and Juan later updated the technique for a new version of the key tool.
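A small numeric example shows why stretching breaks linearity. The sketch below uses the midtones transfer function MTF(x) = (m - 1)x / ((2m - 1)x - m). This is the curve commonly cited for PixInsight's HistogramTransformation and screen-stretch tools. Treat both that attribution and the midtones value m = 0.01 as assumptions. In the linear data, one pixel is exactly twice as bright as another; after the stretch, the ratio is no longer 2.

```python
import numpy as np

def mtf(x, m):
    """Midtones transfer function: maps 0 -> 0, m -> 0.5 and 1 -> 1, for 0 < m < 1."""
    x = np.asarray(x, dtype=float)
    return (m - 1.0) * x / ((2.0 * m - 1.0) * x - m)

m = 0.01                          # hypothetical midtones balance (a strong stretch)
faint, brighter = 0.002, 0.004    # hypothetical linear pixel values, ratio exactly 2
s_faint, s_brighter = mtf([faint, brighter], m)

print(brighter / faint)           # ratio in the linear data: 2.0
print(s_brighter / s_faint)       # ratio after stretching: about 1.72, no longer 2
```

The stretch compresses the brighter value relative to the fainter one. That is exactly what makes faint detail visible on screen, and it is also why linear-stage processing is done before this step.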

The general idea is to use a powerful-yet-rather-mysterious tool called Multiscale Median Transform (MMT) to smooth the image to a greater or lesser degree. This smoothing can be (and needs to be) applied more strongly to the dimmer, noisier areas. Conversely, it can be (and needs to be) applied less strongly to the brighter areas. A copy of the image, used as a luminance mask, directs the process more strongly onto the dark areas and less strongly onto the bright areas - see Juan's posts and Harry's video for more information on luminance masks.
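Conceptually, a mask turns a process into a per-pixel blend between the original and processed images. The sketch below assumes the usual convention: a mask value of 1 (white) lets the process act fully, and 0 (black) fully protects the pixel. The function name is hypothetical, not a PixInsight API.

```python
import numpy as np

def apply_through_mask(original, processed, mask):
    """Per-pixel blend: mask = 1 takes the processed value, mask = 0 keeps the original."""
    original = np.asarray(original, dtype=float)
    processed = np.asarray(processed, dtype=float)
    mask = np.clip(np.asarray(mask, dtype=float), 0.0, 1.0)
    return mask * processed + (1.0 - mask) * original

# Hypothetical pixels: dark background, faint galaxy halo, bright core.
original = np.array([0.10, 0.50, 1.00])
smoothed = np.array([0.20, 0.20, 0.20])   # stand-in for a heavily smoothed result
mask = np.array([1.00, 0.50, 0.00])       # background open, halo half-protected, core protected
print(apply_through_mask(original, smoothed, mask))
```

Intermediate mask values give partial processing. This is what lets a single smoothly varying mask stand in for several hard-edged ones.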

So, to start at the beginning: I had calibrated my light frames with dark, flat, and bias frames, then registered (aligned) and statistically combined the calibrated subexposures. Here's an autostretched closeup of the noise I had to deal with:

My goal was to try to smooth the noise, although I knew I wouldn't be able to do a perfect job of it. I did, however, want to smooth it enough to make it worth stretching the image to bring out most or all of the faint outer parts of the galaxies. Part of this process involves protecting parts of the image from the noise-reduction tool, which is the job of luminance masking.
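The calibration and combination steps mentioned above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not PixInsight's implementation:

- The master dark matches the lights' exposure time and temperature, so it already contains the bias.
- The flat is bias-subtracted and normalized to a mean of 1.
- The frames are already registered.
- A plain k-sigma clipped mean stands in for whichever rejection algorithm was actually used.

```python
import numpy as np

def calibrate(light, master_dark, master_flat, master_bias):
    """Dark-subtract a light frame and divide by a bias-subtracted, normalized flat."""
    flat = np.asarray(master_flat, dtype=float) - master_bias
    flat = flat / flat.mean()
    return (np.asarray(light, dtype=float) - master_dark) / flat

def sigma_clipped_mean(frames, k=3.0):
    """Per-pixel mean of registered frames, rejecting values > k sigma from the median."""
    stack = np.asarray(frames, dtype=float)
    median = np.median(stack, axis=0)
    sigma = stack.std(axis=0)
    keep = np.abs(stack - median) <= k * sigma
    n_kept = np.maximum(keep.sum(axis=0), 1)    # guard against dividing by zero
    return np.where(keep, stack, 0.0).sum(axis=0) / n_kept

# Synthetic example: a uniform 100-count sky seen through vignetting.
bias = np.full(3, 5.0)
dark = np.full(3, 15.0)                          # includes the bias
vignetting = np.array([0.8, 1.0, 1.2])
flat = bias + 1000.0 * vignetting
lights = [dark + 100.0 * vignetting for _ in range(20)]
lights[0][0] = 5000.0                            # a cosmic-ray hit in one frame
calibrated = [calibrate(f, dark, flat, bias) for f in lights]
print(sigma_clipped_mean(calibrated))            # flat, vignetting-free 100s
```

Clipping rejects the cosmic-ray hit that a straight average would smear into the result. The flat division removes the vignetting pattern.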

Luminance Masking - Juan Knows Best

In a recent post, I described my use of PI's Range Selection tool to make luminance masks. I thought it made a lot of sense to build at least two or three separate masks, and then to apply MMT noise reduction to the different zones that those masks would delineate. In my `M87 Chain' image, I treated three zones differently. Zones 1) the dark, noisy background and 2) the dim, fairly noisy outer parts of the galaxies each got their own MMT noise-reduction settings. Zone 3), the bright core areas of the galaxies, got deconvolution sharpening rather than noise reduction.

Some time later, I found myself thinking `wouldn't it be nice to be able to make just one mask, which would automatically apply more protection (from the noise-reduction routine) to the brighter areas, and smoothly reduce the amount of protection applied to the dimmer areas?' After a little while, I slapped my head and said `You fool, that's what Juan taught us to do in the first place! He uses an inverted copy of the image itself as the luminance mask, and this does the automatic masking you're looking for!' This is a really basic idea, and I felt silly for having concocted my separate-masks approach in the first place.

So, I made a copy of the image, inverted it, blurred it with the Convolution tool, and applied it to the image as a mask:

The redder areas in the mask overlay are more strongly protected, and the closer-to-black areas are less protected. The latter will therefore undergo more noise reduction, all from a single application of the MMT tool.
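The copy-invert-blur recipe can be sketched in NumPy as follows. The function names and blur width are hypothetical stand-ins for PixInsight's Invert and Convolution tools. The sketch simply rescales the image to [0, 1] before inverting. A truly linear image is so dark that its inverted copy would be nearly uniform white, which is why a stretched copy is commonly used for masks. Bright structures end up dark (protected) in the mask; the background ends up bright (open to noise reduction).

```python
import numpy as np

def gaussian_kernel(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def blur(image: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with edge-replicated borders (output keeps the input shape)."""
    k = gaussian_kernel(sigma)
    padded = np.pad(image, len(k) // 2, mode="edge")

    def conv(v):
        return np.convolve(v, k, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)
    return np.apply_along_axis(conv, 0, rows)

def inverted_luminance_mask(image: np.ndarray, sigma: float = 2.0) -> np.ndarray:
    """Copy, rescale to [0, 1], invert, and blur: bright objects become dark mask areas."""
    img = np.asarray(image, dtype=float)
    img = (img - img.min()) / max(img.max() - img.min(), 1e-12)
    return np.clip(blur(1.0 - img, sigma), 0.0, 1.0)

# Hypothetical test image: faint background with one bright "galaxy core".
image = np.full((32, 32), 0.01)
image[14:19, 14:19] = 1.0
mask = inverted_luminance_mask(image, sigma=2.0)
print(mask[16, 16], mask[0, 0])   # core is protected (low), background is open (high)
```

The blur softens the mask's transitions so that the noise reduction fades in gradually around bright objects instead of stopping at a hard edge.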

First Attempt at MMT - Little Dark Blobs

I love Pixinsight because it's so powerful, and because the people who are really good at it are able to achieve some amazing results. I aspire to understand all of PI's tools at a `master' level someday, if that's even possible. However, some of those tools, like MMT, have a lot of settings to tweak, and it's hard to know what values to use for the various settings. As I describe what I did with MMT in this case, I'll assume the reader has examined the posts by Juan Conejero that I linked above.

When using MMT for noise reduction, one generally needs to check the Noise Reduction box for each of the wavelet layers. Additionally, it seems that MMT noise reduction should be applied more strongly for the small-scale layers, and less strongly for the large-scale layers. (As near as I can tell, this seems to mean using larger Threshold settings on the smaller-scale layers, although for the life of me I don't know what the Threshold numbers mean.) After some iterating, I arrived at these settings:

These settings did manage to smooth the background, but I was left with a number of little dark blobs scattered around the image - I think you can see them here:

Hmm. Close, but no cigar. If only there were a way to get rid of those little dark blobs!
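For readers who, like me, wonder what a per-layer Threshold might mean, here is a simplified sketch of multiscale median noise reduction. It is not PixInsight's MMT code. It assumes:

- dyadic median windows (3, 5, 9, ... pixels);
- a per-layer noise estimate from the median absolute deviation (MAD);
- zeroing of coefficients smaller than k times that estimate, with k playing the role of a per-layer threshold.

That k-sigma reading of "Threshold" is borrowed from common multiscale denoising practice and is an assumption here. In this sketch, larger k values on the small-scale layers smooth those layers harder, matching the pattern of settings described above.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def median_filter(image, size):
    """Square median filter of odd width `size`, with edge-replicated borders."""
    padded = np.pad(image, size // 2, mode="edge")
    return np.median(sliding_window_view(padded, (size, size)), axis=(-2, -1))

def multiscale_median_transform(image, n_layers):
    """Split an image into detail layers plus a smooth residual (they sum back exactly)."""
    current = np.asarray(image, dtype=float)
    layers = []
    for j in range(n_layers):
        smoother = median_filter(current, 2 * 2**j + 1)   # windows 3, 5, 9, 17, ...
        layers.append(current - smoother)
        current = smoother
    return layers, current

def mad_sigma(layer):
    """Robust noise estimate for one layer (MAD scaled to a Gaussian sigma)."""
    return np.median(np.abs(layer - np.median(layer))) / 0.6745

def mmt_denoise(image, thresholds):
    """Zero coefficients below k * sigma in each layer; thresholds[0] is the finest layer."""
    layers, residual = multiscale_median_transform(image, len(thresholds))
    result = residual.copy()
    for layer, k in zip(layers, thresholds):
        result += np.where(np.abs(layer) < k * mad_sigma(layer), 0.0, layer)
    return result

rng = np.random.default_rng(1)
noisy = 0.1 + rng.normal(0.0, 0.01, size=(64, 64))   # hypothetical flat, noisy background
smoothed = mmt_denoise(noisy, thresholds=[3.0, 2.0, 1.0])
print(noisy.std(), smoothed.std())
```

Hard thresholding like this leaves isolated coefficients that happen to exceed k sigma untouched. That is one plausible source of small leftover blobs.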

`Adaptive' to the Rescue

Casting about for a solution, I read the long tooltip for the `Adaptive' sliders in the MMT noise-reduction dialog. It contained this line: "Increase this parameter when you see isolated, high-contrast, relatively small structures that survive after finding an otherwise good noise threshold value." This sounded promising. But how to minimize the time I'd have to spend iterating the Adaptive values?

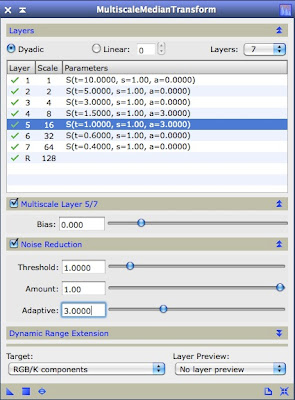

Here's what I did: I used PI's ExtractWaveletLayers script to break the image down into its constituent layers of detail. Zooming in closely on each layer, I noticed that the `dark blobs' seemed to be about 16-32 pixels in size, roughly speaking. So, I gently increased the Adaptive settings for the 16-pixel and 32-pixel wavelet layers in MMT:

Having done this, I got a better, smoother result:

It's a small victory, and I suppose it's nothing to brag about, but for a Pixinsight learner like me, it felt good to be able to smooth a noisy image this much without it looking drastically over-smoothed. I was eventually able to use this noise reduction as one step in the overall processing of my Markarian's Chain image. That image will be the subject of the next post!
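For anyone curious about what ExtractWaveletLayers produces, the sketch below builds dyadic wavelet layers. It uses the à trous algorithm with a B3-spline kernel, a common choice for this kind of decomposition; whether it matches the script's defaults is an assumption. Layer j (counting from 1) responds most to structures around 2^(j-1) pixels across, so blobs of roughly 16 to 32 pixels land in layers 5 and 6. The function names are hypothetical.

```python
import numpy as np

B3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0   # B3-spline smoothing kernel

def atrous_kernel(j):
    """B3 kernel with 2**j - 1 zeros ('holes') inserted between its taps."""
    kernel = np.zeros(4 * 2**j + 1)
    kernel[:: 2**j] = B3
    return kernel

def smooth(image, j):
    """Separable convolution with the j-th a trous kernel, edge-replicated borders."""
    kernel = atrous_kernel(j)
    padded = np.pad(image, len(kernel) // 2, mode="edge")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)
    return np.apply_along_axis(conv, 0, rows)

def wavelet_layers(image, n_layers):
    """Detail layers at scales 1, 2, 4, ... pixels, plus the residual; they sum to the image."""
    current = np.asarray(image, dtype=float)
    layers = []
    for j in range(n_layers):
        smoother = smooth(current, j)
        layers.append(current - smoother)
        current = smoother
    return layers, current

def layer_for_scale(pixels):
    """1-based layer index whose dyadic scale 2**(index - 1) is the largest not above `pixels`."""
    return int(np.floor(np.log2(pixels))) + 1

# Hypothetical image: a soft Gaussian blob (sigma of 6 pixels) on a flat background.
y, x = np.mgrid[0:64, 0:64]
blob = np.exp(-((x - 32) ** 2 + (y - 32) ** 2) / (2 * 6.0 ** 2))
layers, residual = wavelet_layers(blob, 6)
print(layer_for_scale(16), layer_for_scale(32))             # 5 6
print([round(float(np.abs(w).max()), 4) for w in layers])   # peak response per layer
```

Comparing the per-layer peak responses is a quick numerical analogue of zooming in on each extracted layer to see where a structure of a given size shows up.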