IC 1318 d and e and LDN 889, a.k.a. the Butterfly Nebula, imaged from Lassen Peak.

Only a couple of nights in my week-long trip had worthwhile skies, so I had to abandon my plans to image the Swan nebula (M17) and the Triangulum galaxy (M33), and concentrate on a single object that would be near the zenith, the point directly overhead, for most of the night. An object near the zenith is seen through the least possible atmosphere. In this case that meant through the least possible smoke, depending on how the wind was blowing the smoke around.
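To put a rough number on that: in the simple plane-parallel approximation, the relative path length through the atmosphere (the air mass) grows as the secant of the angle from the zenith. The Python sketch below only illustrates that relation. The function name `airmass` is made up for this example, and the approximation ignores the Earth's curvature and says nothing about how patchy smoke is actually distributed.

```python
import math

def airmass(zenith_angle_deg: float) -> float:
    """Relative air mass in the plane-parallel approximation, X = sec(z)."""
    if not 0.0 <= zenith_angle_deg < 90.0:
        raise ValueError("zenith angle must be in [0, 90) degrees")
    return 1.0 / math.cos(math.radians(zenith_angle_deg))

# Compare the path length overhead with two lower altitudes.
for z in (0, 30, 60):
    print(f"zenith angle {z:2d} deg -> air mass {airmass(z):.2f}")
```

Under this approximation, an object 60 degrees from the zenith is seen through about twice as much air as one directly overhead.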

During northern-hemisphere summer nights, the region of the zenith is dominated by Cygnus, the Swan. Also known as the `Northern Cross', Cygnus is a grand constellation, one of the few that really looks like its namesake. Right at the heart of the swan is the star Gamma Cygni (a.k.a. Sadr). A good deal of bright emission nebulosity and dark dust can be seen around Gamma Cygni, making it a popular target for imagers. I happened to pick up the September 2012 issue of Sky and Telescope right before my trip, and when I had to pick an imaging target in Cygnus, I thought of the Gamma Cygni area. Sue French and Steve Gottlieb had covered this region in two very nice articles in the September S&T, and Rob Gendler's image, accompanying Steve's article, really got me excited about this area.

According to Steve's article, the `butterfly' is formed by two portions of the IC 1318 emission-nebula complex (IC 1318 d and e), in front of which lies the Lynds dark nebula 889, a mass of dark absorbing dust. The bright emission nebulosity forms the wings of the butterfly, and LDN 889 forms the body, complete with a head that sports two antennae! Like other `emission' nebulae, the bright material glows because of the excitation of the hydrogen atoms of which it's made. IC 1318 is a star-forming region, and ultraviolet light from hot, massive, young stars causes the hydrogen atoms to glow, a little like a fluorescent light tube or a fluorescent mineral. LDN 889 consists of microscopic grains of interstellar dust, which absorb the light from the nebula. (The sky over the Lassen Peak region often contained clouds of smoke that dimmed the stars in much the same way.)

The Reading fire, one of the fires that turned the blue sky brown for much of this year's trip. (Image credit: National Park Service, Lassen Volcanic National Park)

Data Acquisition

On two nights, the sky was acceptably transparent for imaging, and I managed to acquire three hours of data through a clear (`Luminance') filter, in 5-minute subexposures. The last night of the trip yielded a very nice sky, thanks to some fortuitous wind patterns, with the Milky Way blazing bright and `sugary' overhead. Two of my three hours of data were acquired under that sky.
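The arithmetic behind those numbers can be sketched in a few lines of Python. It assumes equal-quality subexposures with uncorrelated noise, in which case the signal-to-noise ratio of an averaged stack grows roughly as the square root of the number of frames. The function name `stack_summary` is hypothetical.

```python
import math

def stack_summary(total_hours, sub_minutes):
    """Return (number of subexposures, approximate SNR gain over one sub)."""
    n_subs = int(total_hours * 60 // sub_minutes)
    # Assumption: uncorrelated noise, so SNR scales as sqrt(N).
    return n_subs, math.sqrt(n_subs)

for hours in (2, 3):
    n, gain = stack_summary(hours, 5)
    print(f"{hours} h of 5-minute subs: {n} frames, SNR gain ~{gain:.1f}x")
```

Three hours of 5-minute subexposures is 36 frames, or roughly a sixfold SNR improvement over a single frame under these idealized assumptions. Real frames taken through varying smoke will not all be of equal quality.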

I would have liked to shoot some color data, but equipment issues put an end to that idea. Perhaps foolishly, I decided to try to `drive' my mount from my laptop. MaxIm DL was able to talk to the mount and order it to slew around the sky, but I kept having a problem with `backwards slews' in the western part of the sky. I'd have shot an additional 3 or 4 hours of data on the final, clear night if I hadn't been trying to debug this problem. Oh well, I'll get it sorted eventually, and at least I got three hours of luminance.

PixInsight Processing

The data for this image followed my standard PixInsight processing routine for a luminance-only image:

- Calibrate subexposures with the BatchPreprocessing script

- Register and stack the calibrated subexposures

- Apply Deconvolution to sharpen the bright, high-signal-to-noise-ratio (high SNR) areas

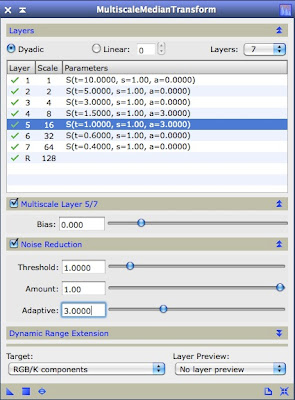

- Apply Multiscale Median Transform to smooth the dark (low SNR) areas

- Stretch the brightness values of the pixels with Histogram Transformation and Local Histogram Equalization

- Shrink (actually more like dim) stars with StarMask and Morphological Transformation

- Crop, convert to a standard ICC color profile for web publishing, and save as JPEG.
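As an illustration of one step in the list above, here is a minimal Python sketch of the idea behind stacking with outlier rejection: each pixel is averaged across the registered frames after discarding values that sit far from the median. This is not PixInsight's implementation. The names `clipped_mean` and `stack`, the MAD-based sigma estimate, and the toy pixel values are all assumptions chosen for the example.

```python
import statistics

def clipped_mean(samples, kappa=3.0):
    """Average one pixel's values across frames, rejecting outliers.

    A value is rejected if it lies more than kappa robust sigmas from the
    median, with sigma estimated from the median absolute deviation (MAD).
    """
    med = statistics.median(samples)
    mad = statistics.median([abs(v - med) for v in samples])
    sigma = 1.4826 * mad  # MAD-to-sigma factor for Gaussian noise
    kept = [v for v in samples if abs(v - med) <= kappa * sigma]
    return sum(kept) / len(kept) if kept else med

def stack(frames, kappa=3.0):
    """Combine registered frames (equal-length flat pixel lists)."""
    return [clipped_mean(values, kappa) for values in zip(*frames)]

# Six toy two-pixel frames; the last has a hot value (say, a satellite trail).
frames = [[10, 50], [11, 52], [9, 51], [10, 49], [10, 50], [100, 48]]
print(stack(frames))
```

The hot value of 100 in the first pixel is rejected, so it does not drag the average upward the way a plain mean would.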

Room for Improvement

(PixInsight geekery ahead...)

Naturally, I would have liked to acquire more data, including color data. Processing-wise, I noticed that some small-scale, `salt-and-pepper-like' noise was introduced somewhere in the processing. This probably happened during the Histogram Transformation or the Local Histogram Equalization, despite my use of a luminance mask. The luminance mask was made in the usual way, by applying an auto-STF to a copy of the image (via HT). I wonder if I should have done a more elaborate intensity transformation when I made the luminance mask, so as to protect the dark areas better, and to get a more effective deconvolution in the bright areas.
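For readers unfamiliar with the idea, a luminance mask can be sketched as follows: stretch a copy of the image with a midtones transfer function, then use the stretched copy as a per-pixel weight, so that a process acts fully on bright areas and barely on dark ones. The Python below is a toy illustration on a flat list of pixel values in the range 0 to 1. The function names, the midtones value of 0.05, and the stand-in "process" are assumptions for the example. A real auto-STF also derives shadow clipping and the midtones balance from image statistics, which this sketch skips.

```python
def mtf(x, m):
    """Midtones transfer function: maps 0 -> 0, m -> 0.5, 1 -> 1."""
    if not 0.0 < m < 1.0:
        raise ValueError("midtones balance must be in (0, 1)")
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    return (m - 1.0) * x / ((2.0 * m - 1.0) * x - m)

def make_mask(pixels, midtones):
    """Build a luminance mask by stretching a copy of the image."""
    return [mtf(p, midtones) for p in pixels]

def apply_through_mask(original, processed, mask):
    """Blend per pixel: mask 1 takes the processed value, mask 0 keeps the original."""
    return [m * p + (1.0 - m) * o for o, p, m in zip(original, processed, mask)]

pixels = [0.01, 0.05, 0.30]                      # dark sky, midtone, bright nebula
mask = make_mask(pixels, midtones=0.05)
processed = [min(1.0, 1.2 * p) for p in pixels]  # stand-in for any process
result = apply_through_mask(pixels, processed, mask)
print(mask, result)
```

A more elaborate intensity transformation of the mask would amount to reshaping this weighting curve, for example pushing the darkest pixels closer to zero so they are protected more strongly.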

After the initial star-shrinking, which worked mostly on the small stars, I tried to build a new star mask for the larger, more bloated stars, but after a lot of experimentation, I hadn't gotten much of a result. I decided to post the image as-is, but I still dream of dealing with the large stars someday.
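The general idea behind mask-based star shrinking can be shown on a one-dimensional brightness profile: a morphological erosion (a sliding minimum) pulls each pixel down toward its darkest neighbor, and a star mask restricts that change to the stars. The sketch below is a toy version with hypothetical names and values, not the StarMask or Morphological Transformation tools themselves.

```python
def erode_1d(values, radius=1):
    """Morphological erosion: replace each value with the local minimum."""
    n = len(values)
    return [min(values[max(0, i - radius):min(n, i + radius + 1)])
            for i in range(n)]

def shrink_stars(values, star_mask, radius=1):
    """Apply erosion only where the star mask is nonzero (1 = star)."""
    eroded = erode_1d(values, radius)
    return [m * e + (1.0 - m) * v
            for v, e, m in zip(values, eroded, star_mask)]

# A star profile on a flat background, with a mask covering the star.
profile = [0.1, 0.1, 0.4, 0.9, 0.4, 0.1, 0.1]
star_mask = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
print(shrink_stars(profile, star_mask))
```

In this toy case the star's peak drops from 0.9 to 0.4 and its wings fall to the background level, which is why the effect looks more like dimming than shrinking. Larger, bloated stars would need both a wider mask and a larger erosion radius.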